Digital representation and experimental simulation of nonverbal behavior

G. Bente

Department of Psychology, University of Cologne, Cologne, Germany

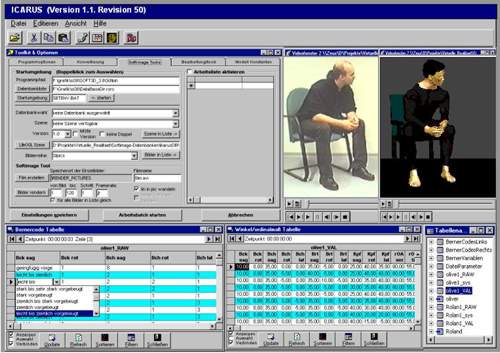

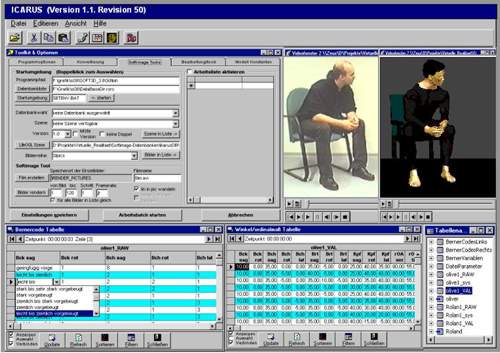

Over the last two decades, nonverbal behavior research has significantly advanced methodological knowledge in the area of behavior registration and measurement. Based on standard video technology, a series of high-resolution transcription procedures and coding strategies have been developed that provide detailed and accurate protocols for both body movement and facial behavior. Moreover, tools for automatic behavior registration have recently been introduced to the field; they use sophisticated measurement devices such as data suits, infrared and ultrasonic sensors, and video-based pattern recognition techniques for data acquisition. Automatic measurement devices provide fast access to high-resolution data, which can easily be transformed back into moving objects by any standard 3D-animation tool. However, they mostly require the application of specific sensors or markers and may thus reduce the ecological validity of the observations made. Video-based coding procedures, on the other hand, are time-consuming or lack the descriptive accuracy required for computer animation. As most automatic motion capture devices share a common geometry with professional 3D-animation tools, it may seem appropriate to refer to their generic 3D geometry when constructing a compatible transcription language for video-based analysis. However, closer examination revealed that describing an object's spatial attributes in terms of translations along and rotations about the axes of a three-dimensional coordinate system is unfamiliar and hard to grasp for the human observer. Thus it is not only difficult for the investigator to interpret motion capture data, it is also nearly impossible to edit such behavior records in order to experimentally control particular nonverbal patterns in effect studies.
It became apparent that the implicit geometry of existing high-resolution transcription systems, such as the Bernese System for Time Series Notation of Movement Behavior (BTSN; [2]), could best be understood in terms of projection angles of an object's local axes rather than in terms of generic rotation angles. Based on this insight, algorithms were formulated that translate phenotypical projection codes (BTSN) into generic rotation angles, which can serve as input for professional 3D-animation platforms, and vice versa. This conversion utility is at the core of a hardware and software platform that we developed for the interactive coding, editing and experimental computer simulation of movement behavior [1]. A screenshot of the editing tool is shown in Figure 1. Basic features of the new methodology are described, and implications for descriptive analysis and experimental computer simulation of nonverbal behavior are discussed.
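
The difference between projection angles and generic rotation angles can be illustrated with a minimal sketch. It is not the conversion algorithm of the platform described here, and it does not reproduce the actual BTSN code scheme; it only shows the geometric idea under assumed conventions. The assumptions are: a right-handed coordinate system with x to the side, y up and z pointing out of the frontal plane; a body part whose local "up" axis starts at (0, 1, 0); and a pose given by a rotation about x (`pitch`) followed by a rotation about z (`roll`). The function names are hypothetical.

```python
import math


def rotate_up_axis(pitch, roll):
    """Rotate the local up axis (0, 1, 0) by Rz(roll) @ Rx(pitch).

    Angles are in radians. Returns the rotated axis as a unit vector.
    """
    # Rx(pitch) tilts the up axis within the y-z plane.
    x, y, z = 0.0, math.cos(pitch), math.sin(pitch)
    # Rz(roll) then rotates the result within the x-y plane.
    return (
        x * math.cos(roll) - y * math.sin(roll),
        x * math.sin(roll) + y * math.cos(roll),
        z,
    )


def rotation_to_projection(pitch, roll):
    """Generic rotation angles -> projection angles of the up axis.

    sagittal: angle of the axis as seen from the side (y-z plane).
    lateral:  angle of the axis as seen from the front (x-y plane).
    The sign of `lateral` is chosen (an assumption) so it equals `roll`.
    """
    ux, uy, uz = rotate_up_axis(pitch, roll)
    sagittal = math.atan2(uz, uy)
    lateral = math.atan2(-ux, uy)
    return sagittal, lateral


def projection_to_rotation(sagittal, lateral):
    """Projection angles -> generic rotation angles (inverse mapping).

    Valid only while both projection angles stay below 90 degrees in
    magnitude, i.e. the axis still points into the upper half space.
    """
    # An axis with these two projections is proportional to this vector.
    ux, uy, uz = -math.tan(lateral), 1.0, math.tan(sagittal)
    norm = math.sqrt(ux * ux + uy * uy + uz * uz)
    ux, uy, uz = ux / norm, uy / norm, uz / norm
    pitch = math.asin(uz)
    roll = math.atan2(-ux, uy)
    return pitch, roll


if __name__ == "__main__":
    pitch, roll = math.radians(30), math.radians(40)
    sagittal, lateral = rotation_to_projection(pitch, roll)
    print("projection angles (deg):",
          round(math.degrees(sagittal), 1), round(math.degrees(lateral), 1))
    back = projection_to_rotation(sagittal, lateral)
    print("recovered rotation angles (deg):",
          [round(math.degrees(a), 1) for a in back])
```

In this sketch the sagittal projection angle equals the pitch rotation only when the roll is zero; once both rotations are combined, the angle an observer sees from the side is larger than the pitch value stored in the rotation record. This is one way to see why an observer-oriented code and a generic rotation code need an explicit conversion in both directions.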

Figure 1. Screen representation of the interactive coding and rendering software.

Paper presented at Measuring Behavior 2000, 3rd International Conference on Methods and Techniques in Behavioral Research, 15-18 August 2000, Nijmegen, The Netherlands