Action unit recognition in spontaneous facial behavior

J. Cohn¹, J. Xiao², T. Moriyama², J. Gao², Z. Ambadar¹, T. Kanade²

¹Clinical Psychology Program, University of Pittsburgh, Pittsburgh, PA, U.S.A.

²Robotics Institute, Carnegie Mellon University, Pittsburgh, PA, U.S.A.

Within the past decade, there has been significant effort toward automatic recognition of human facial expression using computer vision. Several such systems have recognized, under controlled conditions, a small set of emotion-specified expressions such as joy and anger. Others have achieved some success in the more difficult task of recognizing the action units of the Facial Action Coding System (FACS). Action units are the smallest visible changes in facial expression.

Our interdisciplinary group of psychologists and computer scientists has developed a system that recognizes 18 of the approximately 30 action units that have a known anatomic basis and occur frequently in emotion expression and nonverbal communication. The action units are recognized whether they occur alone or in additive or non-additive combinations. A limitation of this and related research in automatic facial expression recognition is that it has been restricted to deliberate facial expressions recorded under controlled conditions, which exclude significant head motion and other factors that can confound feature extraction.

Automatic recognition of facial action units in spontaneously occurring facial behavior presents several technical challenges. These include rigid head motion; non-frontal pose; occlusion caused by head motion, glasses, and gestures; talking; low-intensity action units; and rapid facial motion. Version 3 of our Face Analysis System addresses these challenges. The system recovers full head motion (6 DOF: three rotations and three translations), stabilizes facial regions, extracts motion and appearance information, and recognizes important facial actions such as eye blinking.
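To make "full head motion (6 DOF)" concrete, the sketch below represents a head pose as three rotation angles plus three translations, moves 3-D face points by that pose, undoes it (the rigid core of stabilization), and projects points to the image. It is a generic illustration, not the Face Analysis System's code: the Euler-angle order (roll about z, yaw about y, pitch about x), the pinhole camera, and all function names are assumptions chosen for the example.

```python
import math


def _matmul(a, b):
    """Multiply two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def rotation_matrix(pitch, yaw, roll):
    """R = Rz(roll) . Ry(yaw) . Rx(pitch), angles in radians (assumed convention)."""
    cx, sx = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    cz, sz = math.cos(roll), math.sin(roll)
    rx = [[1, 0, 0], [0, cx, -sx], [0, sx, cx]]
    ry = [[cy, 0, sy], [0, 1, 0], [-sy, 0, cy]]
    rz = [[cz, -sz, 0], [sz, cz, 0], [0, 0, 1]]
    return _matmul(rz, _matmul(ry, rx))


def apply_pose(points, pose):
    """Move 3-D points by a 6-DOF pose (pitch, yaw, roll, tx, ty, tz): p' = R p + t."""
    pitch, yaw, roll, tx, ty, tz = pose
    r = rotation_matrix(pitch, yaw, roll)
    t = (tx, ty, tz)
    return [tuple(sum(r[i][k] * p[k] for k in range(3)) + t[i] for i in range(3))
            for p in points]


def remove_pose(points, pose):
    """Undo a pose, p = R^T (p' - t), returning points to a canonical frontal frame."""
    pitch, yaw, roll, tx, ty, tz = pose
    r = rotation_matrix(pitch, yaw, roll)
    shifted = [(x - tx, y - ty, z - tz) for x, y, z in points]
    # R is orthonormal, so its transpose is its inverse.
    return [tuple(sum(r[k][i] * q[k] for k in range(3)) for i in range(3))
            for q in shifted]


def project(points, focal_length):
    """Pinhole projection to image coordinates; assumes every z > 0."""
    return [(focal_length * x / z, focal_length * y / z) for x, y, z in points]
```

In this toy setting, applying `remove_pose` with the recovered pose brings every tracked point back to where it would appear in a frontal view, which is the idea behind stabilizing a facial region before analyzing it.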

The precision of the recovered full head motion was evaluated against ground truth obtained with a precise position and orientation measurement device, and the two were found to be highly consistent. The full system was tested on video data from a spontaneous two-person interview. Eye blinking (AU 45) was chosen for analysis because of its importance in psychology and neurology and its applied importance in detecting deception. Eye blinking occurred with relatively high frequency (167 occurrences in 10 subjects of diverse ethnic backgrounds) and was reliably coded by human FACS experts, providing a reference for comparison with the face analysis system.
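The abstract reports only that the recovered head motion was highly consistent with the measurement device; it does not name the agreement statistics. One common way to quantify such agreement, assumed here purely for illustration, is to compute the root-mean-square error and the Pearson correlation separately for each of the six pose parameters across frame-aligned tracks. The function names and the per-frame 6-tuple layout are hypothetical.

```python
import math

POSE_NAMES = ("pitch", "yaw", "roll", "tx", "ty", "tz")


def rmse(estimate, truth):
    """Root-mean-square difference between two equal-length series."""
    if len(estimate) != len(truth) or not truth:
        raise ValueError("series must be non-empty and of equal length")
    return math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth))


def pearson_r(x, y):
    """Pearson correlation; returns NaN when either series is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    denom = math.sqrt(sxx * syy)
    return float("nan") if denom == 0 else sxy / denom


def compare_pose_tracks(estimated, ground_truth, names=POSE_NAMES):
    """Per-parameter (RMSE, r) for frame-aligned lists of 6-tuples."""
    report = {}
    for i, name in enumerate(names):
        est = [frame[i] for frame in estimated]
        gt = [frame[i] for frame in ground_truth]
        report[name] = (rmse(est, gt), pearson_r(est, gt))
    return report
```

Reporting both numbers is a design choice: correlation captures whether the tracker follows the shape of the motion, while RMSE exposes constant offsets that correlation alone would hide.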

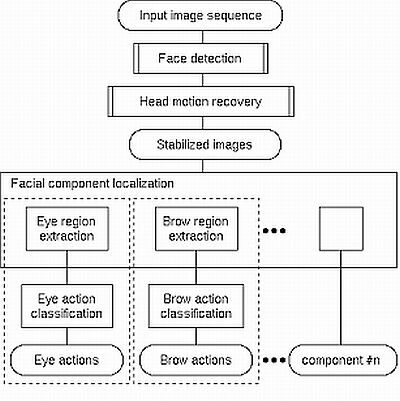

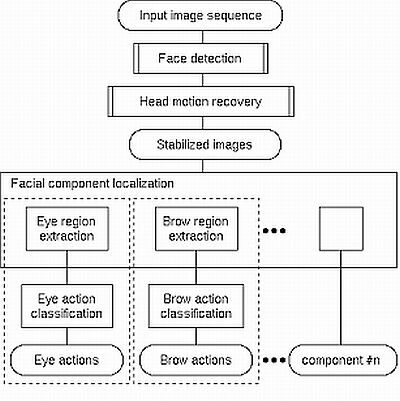

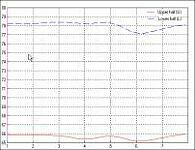

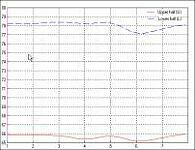

Figure 1 depicts an overview of the face analysis system. A digitized image sequence is input to the system, and the face region is delimited in the initial frame. Full head motion (6 DOF) is then recovered automatically using dynamic templates and a cylindrical face model. Using the recovered motion parameters, the face region is stabilized; an example of system output is shown in Figure 2. Luminance values in the eye region are used for blink recognition (see Figure 3). The system recognized blinks (AU 45) with 98% accuracy in spontaneous facial behavior. Reliable and precise compensation of head motion was critical to AU recognition.
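The abstract states that eye-region luminance drives blink recognition but gives no details of the classifier. The sketch below is a deliberately simple stand-in, not the authors' method: it averages gray levels inside a fixed rectangle of each stabilized frame to form a luminance curve, then flags short runs of frames that rise well above the curve's median. It assumes that closing the eye raises mean luminance (eyelid skin replacing the darker iris and pupil); the `region` layout and the `rise` and `max_len` thresholds are hypothetical values that would need tuning on real data.

```python
from statistics import median


def eye_region_luminance(frames, region):
    """Mean gray level inside an eye rectangle for each stabilized frame.

    frames: list of 2-D lists of gray levels (rows of pixels).
    region: (top, bottom, left, right) with half-open bounds (hypothetical layout).
    """
    top, bottom, left, right = region
    curve = []
    for frame in frames:
        pixels = [frame[r][c] for r in range(top, bottom) for c in range(left, right)]
        curve.append(sum(pixels) / len(pixels))
    return curve


def detect_blinks(curve, rise=20.0, max_len=10):
    """Return (first, last) frame indices of candidate blinks.

    A candidate blink is a run of frames whose luminance exceeds the curve's
    median by at least `rise`, lasting at most `max_len` frames; longer runs
    are treated as sustained closure rather than a blink.
    """
    baseline = median(curve)
    events, start = [], None
    for i, value in enumerate(curve):
        above = value - baseline >= rise
        if above and start is None:
            start = i
        elif not above and start is not None:
            if i - start <= max_len:
                events.append((start, i - 1))
            start = None
    # Close a run that is still open at the end of the sequence.
    if start is not None and len(curve) - start <= max_len:
        events.append((start, len(curve) - 1))
    return events
```

Because the rectangle is fixed in image coordinates, this kind of detector only works if the eye stays in place from frame to frame, which is consistent with the observation above that precise head-motion compensation was critical.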

Figure 1. An overview of the face analysis system.

Figure 2. Automatic recovery of 3-D head motion and image stabilization: (A) Frames 1, 10 and 26 from original image sequences; (B) Face tracking in corresponding frames; (C) Stabilized face image; and (D) Localized face region.

Figure 3. Examples of luminance curves for blink (left) and non-blink (right).

Paper presented at Measuring Behavior 2002, 4th International Conference on Methods and Techniques in Behavioral Research, 27-30 August 2002, Amsterdam, The Netherlands

© 2002 Noldus Information Technology bv