Subjective duration assessment:

an implicit probe for software usability

E. Cutrell, M. Czerwinski and E. Horvitz

Microsoft Research, Redmond, WA, U.S.A.

A classic problem in software usability engineering is that direct assessment of satisfaction is frequently confounded by an inherent positive bias [1]. We describe a new procedure, which we refer to as subjective duration assessment, for gauging users' difficulties with tasks, interfaces and situations. The method centres on the use of time estimation to characterize performance. We introduce relative subjective duration (RSD), a measure that captures the difficulty users have in performing tasks without asking them directly. Although RSD has several applications, we focus on using it to probe users' experiences with software. RSD is based on earlier psychological studies of time estimation, which demonstrated that when engaging tasks are interrupted, participants tend to overestimate how long those tasks took relative to the actual task times. Conversely, the durations of completed tasks tend to be underestimated [2,3]. We explored the value of time estimation as a measure for evaluating task performance in human-computer interaction (HCI). Our hypothesis was that participants would overestimate the duration of tasks they were not able to complete on their own and underestimate the duration of tasks they completed successfully.

To explore this idea, we performed a standard usability study as an iterative test of the usability of an Internet browser. We included seventeen typical browsing tasks, such as account maintenance, playing videos and songs, searching, sending instant messages, reviewing and composing e-mail, and performing calendar activities on the web. Dependent measures included task success rates with and without experimenter intervention, completion times, participants' estimates of how long each task took, and overall user satisfaction ratings.

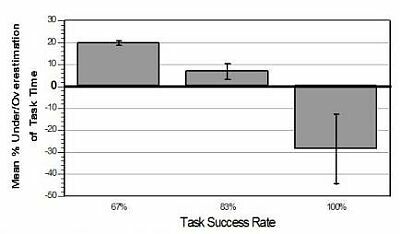

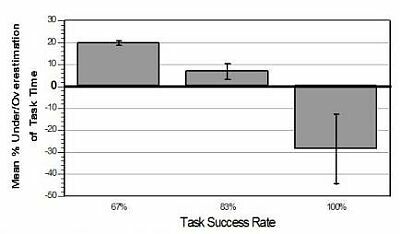

We found that, although success rates without experimenter intervention were quite low (<60%), satisfaction ratings were very high: 17 of the 19 questionnaire items were rated above average. Clearly, user satisfaction and performance were not well correlated. In contrast, users' estimated task times tracked their performance quite well. Participants reliably underestimated the durations of tasks with high success rates and reliably overestimated the durations of tasks with lower success rates. Figure 1 summarizes how estimated task times compared with actual task times.

Figure 1. Over- and underestimation of task times by task success rate (negative y-values are underestimates; positive y-values are overestimates).
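A measure with the sign convention of Figure 1 can be sketched in a few lines of Python. This abstract does not state a formula for RSD, so the sketch assumes a signed relative error, (estimated - actual) / actual, averaged per task; that definition, the `TaskTrial` fields, the function names and the sample numbers are all illustrative assumptions rather than the authors' procedure or data.

```python
from dataclasses import dataclass


@dataclass
class TaskTrial:
    """One participant's attempt at one task (field names are hypothetical)."""
    task: str
    actual_s: float        # measured task time, in seconds
    estimated_s: float     # participant's retrospective estimate, in seconds
    unaided_success: bool  # completed without experimenter intervention


def relative_duration_error(actual_s: float, estimated_s: float) -> float:
    """Signed relative error (assumed definition, not taken from the paper).

    Negative values are underestimates; positive values are overestimates.
    """
    if actual_s <= 0:
        raise ValueError("actual_s must be positive")
    return (estimated_s - actual_s) / actual_s


def summarize_by_task(trials):
    """Return {task: (unaided success rate, mean relative duration error)}."""
    grouped = {}
    for trial in trials:
        grouped.setdefault(trial.task, []).append(trial)

    summary = {}
    for task, rows in grouped.items():
        success_rate = sum(r.unaided_success for r in rows) / len(rows)
        mean_error = sum(
            relative_duration_error(r.actual_s, r.estimated_s) for r in rows
        ) / len(rows)
        summary[task] = (success_rate, mean_error)
    return summary


# Made-up illustrative trials, not results from the study.
trials = [
    TaskTrial("search", 100.0, 80.0, True),
    TaskTrial("search", 200.0, 150.0, True),
    TaskTrial("calendar", 100.0, 150.0, False),
    TaskTrial("calendar", 200.0, 250.0, True),
]
summary = summarize_by_task(trials)
```

With these invented trials, the task that every participant completed unaided gets a negative mean error and the task with the lower unaided success rate gets a positive one, which is the pattern the hypothesis predicts. Dividing by the actual time is a design choice that keeps long and short tasks comparable; a raw difference in seconds would be an equally plausible reading of the figure.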

We believe that RSD is an interesting implicit measure for usability that can be used to probe user satisfaction versus frustration with tasks. As participants do not necessarily know ahead of time what the experimenter expects from the time estimates, they are less likely to bias their time estimates toward a positive response.

References

Paper presented at Measuring Behavior 2002, 4th International Conference on Methods and Techniques in Behavioral Research, 27-30 August 2002, Amsterdam, The Netherlands