Reliability of selected performance analysis systems in football and rugby

N. James, N.M.P. Jones and C. Hollely

Sports Science, University of Wales at Swansea, Swansea, United Kingdom

An examination of 72 behavioral analysis research papers suggested that 70% did not report any reliability and that, of those that did, most used inappropriate statistical techniques [1]. The purpose of this study was to assess the reliability of the analysis procedures for two invasion games, football and rugby. Both games involve many behaviors that are subtly different and can confuse the untrained observer. In football, for example, a tackle may be deemed unsuccessful when the ball goes to another opposition player, yet the ball carrier was deprived of the ball, so some form of success was achieved. Such ambiguity leads to observational errors unless the behavioral definition of the tackle is worded very specifically.

In this study, operational definitions of behaviors were formulated and configurations set up using The Observer. Once the two configurations were complete, matches were coded. The adaptability of The Observer meant that configurations and operational definitions could be amended match by match, as the need arose. This refinement took eight matches for rugby, during which 38 anomalies occurred (79% concerned operational definitions, 13% required configuration changes and 8% could not be resolved), but only two matches for football, where all 9 changes involved the configuration. This difference was largely due to the rugby configuration being used to provide weekly feedback to a professional team, whereas the football configuration was used solely for research purposes. Hence, there was more time pressure to complete the rugby configuration, which resulted in less robust operational definitions than for football. However, the difficulties unique to observing behaviors in each sport would also have contributed to the errors.

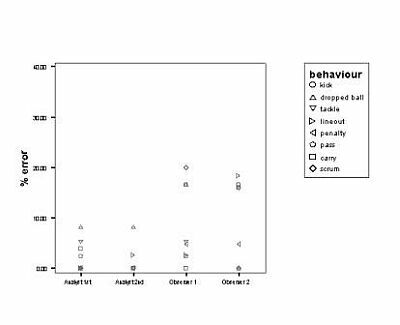

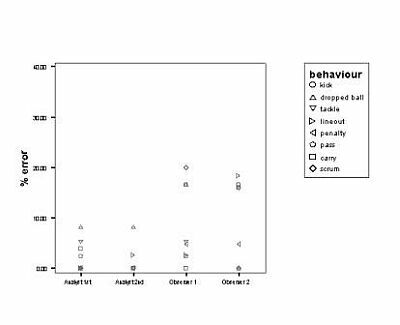

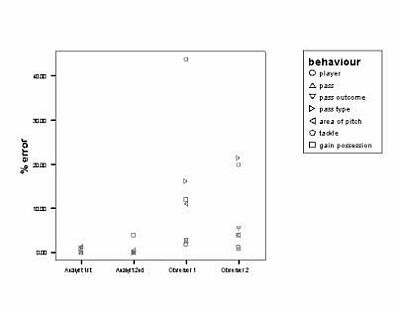

Intra- and inter-operator reliabilities were calculated using the percentage error for each variable [1], which gives a clear picture of the error associated with each variable (Figures 1 & 2). Two trained analysts (with >100 h of system experience) recorded low errors (<8.5%) for all variables in both trials, but two less experienced observers made far more errors on some variables (15-20% in rugby, 11-44% in football). The less experienced observers either failed to label behaviors correctly (52.1% of errors in rugby; 49.6% in football) or failed to record the behavior altogether.
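The abstract does not state the percentage error formula itself; it only cites [1]. As an illustration, the sketch below assumes a commonly used form, the absolute difference between two frequency counts divided by their mean and multiplied by 100. The function names, variable names and counts are hypothetical and are not taken from the study.

```python
# Minimal sketch of a per-variable percentage error calculation.
# ASSUMPTION: error = |V1 - V2| / mean(V1, V2) * 100; the abstract
# cites [1] for the method but does not give the formula.

def percentage_error(count_a: int, count_b: int) -> float:
    """Percentage error between two frequency counts of one variable."""
    mean = (count_a + count_b) / 2
    if mean == 0:
        # Neither observation recorded the behavior: no disagreement.
        return 0.0
    return abs(count_a - count_b) / mean * 100


def reliability_report(obs_a: dict[str, int], obs_b: dict[str, int]) -> dict[str, float]:
    """Percentage error for every variable seen in either observation."""
    variables = sorted(set(obs_a) | set(obs_b))
    return {v: percentage_error(obs_a.get(v, 0), obs_b.get(v, 0)) for v in variables}


# Hypothetical counts from two codings of the same match.
first_coding = {"tackle": 40, "pass": 200, "shot": 10}
second_coding = {"tackle": 44, "pass": 198, "shot": 10}

report = reliability_report(first_coding, second_coding)
for variable, error in report.items():
    print(f"{variable}: {error:.1f}%")
```

The same function serves for intra-operator reliability (one observer coding the match twice) and inter-operator reliability (two observers coding it once each). Because it compares frequency totals only, a mislabeled behavior and a missed behavior can offset one another, so an event-by-event comparison would be needed to separate those two error types.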

Figure 1. Errors for each behavior in the rugby analysis.

Figure 2. Errors for each behavior in the football analysis.

This study implies that operators must be sufficiently trained in linking the recording process with the operational definitions, and that their skill levels must be tested using methods similar to these. High motivation is required to minimize the lapses in concentration that result in certain behaviors being missed. Individual behaviors vary in terms of associated error, but all are within acceptable limits.

References

Paper presented at Measuring Behavior 2002, 4th International Conference on Methods and Techniques in Behavioral Research, 27-30 August 2002, Amsterdam, The Netherlands