Face expression detection and synthesis using statistical models of appearance

H. Kang, T. Cootes and C. Taylor

Imaging Science and Biomedical Engineering, University of Manchester, Manchester, United Kingdom

Face expression change has been recognized as one of the major sources of variation in face modelling. It can significantly change the appearance of a face and degrade the performance of face recognition systems. Statistical models of appearance, which explicitly represent the variation in shape and texture of face images, can be used to encode a face in a compact set of parameters. In this paper, we describe methods of analysing face expressions using such a facial appearance model.

Statistical models of appearance have been widely used for recognition, tracking and synthesis of many different objects. They contain a statistical model of the shape and grey-level appearance of the object of interest which can 'explain' almost any valid example in terms of a compact set of model parameters. Such models have shown good performance in modelling face variations and in synthesizing photo-realistic reconstructions [1,2,3].

Using simple linear regression on a suitable training set of static images, we learn the relationship between different expressions and the parameters of the model. We consider five typical face expressions (neutral, smile, frown, surprise and winking).

Given a new face image, a set of model parameters can be obtained using the model, and the degree of each of the represented facial expressions can be computed using the learnt relationships. Preliminary results show that this method can effectively separate each face expression and can potentially be used for measuring face behavior.
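The regression step can be illustrated with a minimal sketch. It assumes that each training image has already been reduced to a vector of appearance parameters and that each expression estimator is an ordinary least-squares fit from those parameters to a label of 0 (neutral) or 1 (expression). The label coding, the function names and the synthetic data are assumptions made for illustration; they are not taken from the paper.

```python
import numpy as np

def fit_expression_estimator(params, labels):
    """Least-squares fit of labels ~ params @ w + b (hypothetical helper)."""
    # Append a column of ones so the intercept b is fitted with the weights.
    X = np.hstack([params, np.ones((params.shape[0], 1))])
    coef, *_ = np.linalg.lstsq(X, labels, rcond=None)
    return coef[:-1], coef[-1]

def estimate_expression(params, w, b):
    """Degree of the expression predicted for each parameter vector."""
    return params @ w + b

# Synthetic stand-in for appearance parameters (NOT the paper's data).
rng = np.random.default_rng(0)
n_modes = 10
direction = rng.normal(size=n_modes)          # assumed "smile" offset
neutral = rng.normal(size=(40, n_modes))
smile = rng.normal(size=(40, n_modes)) + 3.0 * direction

params = np.vstack([neutral, smile])
labels = np.concatenate([np.zeros(40), np.ones(40)])  # 0 = neutral, 1 = smile

w, b = fit_expression_estimator(params, labels)
scores = estimate_expression(params, w, b)
```

Under these assumptions, `scores` is low for the neutral rows and high for the smile rows, so a single scalar per estimator summarises the degree of that expression.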

A facial appearance model has been built from a face image database which contains multiple images of 100 people, including a variety of posed expressions such as smiles, frowns and looks of surprise. The analysis leads to a parameterised model with 349 modes controlling facial appearance.

The first three modes are displayed in Figure 1.

Figure 1. The first three modes of the face appearance model.

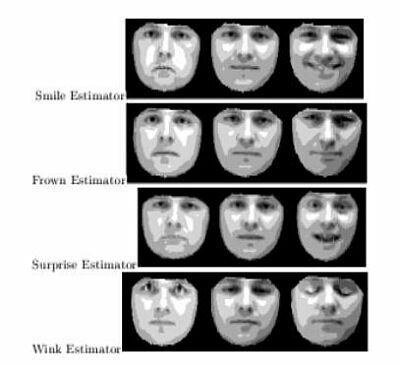
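A mode of a linear statistical model is commonly visualised by displacing the mean along that mode. The sketch below assumes the modes come from principal component analysis of the training vectors and that each mode is shown at plus and minus three standard deviations; both the toy data and the chosen range are assumptions, since the paper does not state how Figure 1 was rendered.

```python
import numpy as np

rng = np.random.default_rng(0)
# Toy training vectors, one row per example. In a real appearance model
# each row would combine shape and texture information.
scales = np.array([5.0, 3.0, 2.0, 1.0, 0.5, 0.1])
data = rng.normal(size=(50, 6)) * scales

mean = data.mean(axis=0)
# PCA via SVD of the centred data: rows of vt are the modes,
# ordered by decreasing variance.
_, s, vt = np.linalg.svd(data - mean, full_matrices=False)
modes = vt
std = s / np.sqrt(len(data) - 1)   # standard deviation along each mode

def vary_mode(k, n_std):
    """Model instance with mode k displaced by n_std standard deviations."""
    return mean + n_std * std[k] * modes[k]

# Sweep the first mode across an assumed range of -3 to +3 standard deviations.
first_mode_sweep = [vary_mode(0, t) for t in (-3.0, 0.0, 3.0)]
```

The middle element of `first_mode_sweep` is the mean instance, and the outer two are the extremes of the first mode under the assumed range.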

We use linear regression to learn the relationship between expression and the model parameters. Four expression estimators were built. Figure 2 shows expressions synthesised using the learnt relationship.

Figure 2. Expression estimator values vary between four standard expressions.

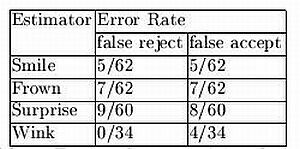
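One possible reading of synthesis with a learnt linear relationship is to regress the model parameters on the expression value and then move a face along the fitted direction. The sketch below follows that reading; the helper names, the 0/1 label coding and the synthetic data are assumptions, and the paper does not confirm that this is how Figure 2 was produced.

```python
import numpy as np

def fit_synthesis_direction(params, labels):
    """Fit params ~ c0 + label * d by least squares (hypothetical helper)."""
    A = np.column_stack([np.ones_like(labels), labels])
    coef, *_ = np.linalg.lstsq(A, params, rcond=None)
    return coef[0], coef[1]   # c0: parameters at label 0, d: change per unit label

def synthesise(face_params, current, target, d):
    """Shift a parameter vector from expression level `current` to `target`."""
    return face_params + (target - current) * d

# Synthetic stand-in for appearance parameters (NOT the paper's data).
rng = np.random.default_rng(1)
neutral = rng.normal(size=(30, 8))
smile = rng.normal(size=(30, 8)) + np.linspace(1.0, 2.0, 8)

params = np.vstack([neutral, smile])
labels = np.concatenate([np.zeros(30), np.ones(30)])

c0, d = fit_synthesis_direction(params, labels)
# Move one neutral face halfway towards a full smile.
half_smile = synthesise(neutral[0], current=0.0, target=0.5, d=d)
```

With binary labels the fitted direction `d` equals the difference between the two class means, so intermediate targets interpolate between a neutral face and the expression.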

Using these expression estimators as classifiers, the facial expression in a new image can be detected. Table 1 shows the test results over the training data set. The threshold was set at 0.5. A false reject means that one of the expressions has been recognized as neutral. A false accept means that a neutral face has been recognized as one of the expressions. For example, in the smile row and false-reject column, the entry 5/62 means that 62 smile faces were tested and 5 of them were detected as neutral.

Table 1. Test results obtained using a training data set.
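The false-reject and false-accept entries can be counted as in the sketch below, which assumes a single estimator whose output is compared against the 0.5 threshold. The scores are made-up illustrative values, not results from the paper, and how the four estimators were combined is not specified in the text.

```python
import numpy as np

def rejection_counts(scores_expr, scores_neutral, threshold=0.5):
    """Return (false_reject, n_expr) and (false_accept, n_neutral)."""
    # False reject: an expression face scored below the threshold (seen as neutral).
    false_reject = int(np.sum(scores_expr < threshold))
    # False accept: a neutral face scored at or above the threshold.
    false_accept = int(np.sum(scores_neutral >= threshold))
    return (false_reject, len(scores_expr)), (false_accept, len(scores_neutral))

# Illustrative estimator outputs only.
smile_scores = np.array([0.9, 0.7, 0.4, 1.1])
neutral_scores = np.array([0.1, 0.6, -0.2])

fr, fa = rejection_counts(smile_scores, neutral_scores)
print(f"false reject {fr[0]}/{fr[1]}, false accept {fa[0]}/{fa[1]}")
# prints "false reject 1/4, false accept 1/3"
```

Each pair has the same form as the table entries, for example 5/62 for the smile false-reject count.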

This demonstrates that simple linear relationships can provide a good model of expression change, and can be used for both expression synthesis and recognition. We are investigating extending the approach to image sequences, which we anticipate will give more reliable results.

Paper presented at Measuring Behavior 2002, 4th International Conference on Methods and Techniques in Behavioral Research, 27-30 August 2002, Amsterdam, The Netherlands

© 2002 Noldus Information Technology bv