Methodology and tools based on video observation and eye-tracking to analyze facial expression recognition

B. Meillon1, J.M. Adam2, B. Meillon2, M. Dubois3 and A. Tcherkassof4

1CLIPS IMAG, Grenoble CEDEX 9, France

2Laboratoire CLIPS IMAG, UJF, Grenoble, France

3Laboratoire LPS, UPMF, Grenoble, France

4Laboratoire de Psychologie Sociale, Grenoble, France

Our project concerns coaching activities that require the supervisor to visualize the face(s) of their partner(s) [1]. Applied examples of such situations are remote assistance, remote teaching and video conferences. Our goal is to find software solutions to help supervisors with their task, by providing them with information about the emotional state of their partner(s). We study how a supervisor proceeds to classify an emotion, and which parts/areas of the face (e.g. lips, eyes) are most important for identifying emotions [2,3,4].

The first step of this project was to acquire raw material. We did this by videotaping students working on three different attention-demanding tasks: one pleasant, one neutral and one unpleasant. The videotaping was done with a hidden camera, and a total of 44 students were filmed. The second step involved asking observers to view these videotapes and identify the emotions they recognized. For each emotion recognized, the observer had to click on the corresponding interface button (Figure 1). This method avoided any interruption of the videotape. Data were collected from 240 observers. The third step was to objectively analyze the eye movement strategies of the observers (n = 80). We used an eye-tracker and specially developed software to monitor and record eye movements and analyze the results. The analysis of eye movement patterns provided information about which facial areas held the observers' attention, along with some spatial and temporal metrics.

Figure 1. Interface for emotion recognition.

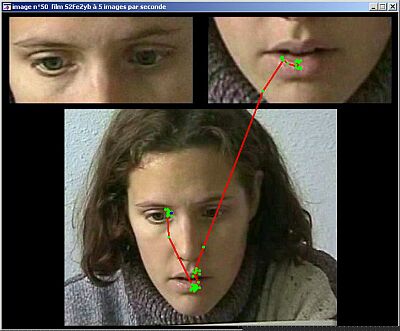
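As an illustration of how button clicks could be recorded without pausing the video, the following minimal sketch stores each click with the video time at which it occurred and counts labels within a time window. The names `EmotionClick` and `emotion_counts`, the record layout and the emotion labels are assumptions made for this example; they do not describe the actual software.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class EmotionClick:
    """One button click (hypothetical record layout)."""
    observer_id: str
    video_time: float  # seconds from the start of the clip when the click occurred
    emotion: str       # label of the button clicked (labels are assumed)


def emotion_counts(clicks, start, end):
    """Count the emotion labels clicked in the video-time window [start, end)."""
    return Counter(c.emotion for c in clicks if start <= c.video_time < end)
```

Because each click carries its own video time, judgments from many observers can be aligned on the same clip afterwards, for example by comparing counts across successive windows.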

The software displays the scan path, superimposed over the videotape. Two visualization modes have been implemented: raw measurement points (Figure 2) and fixation points (Figure 3).

Figure 2. Raw measurement points.

Figure 3. Fixation points.

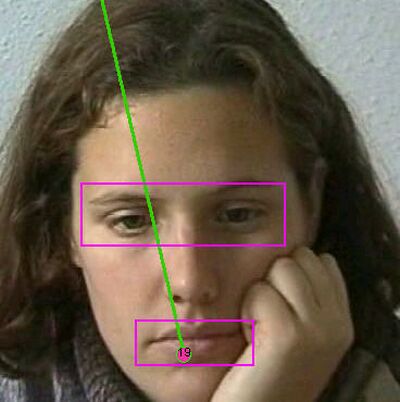
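The abstract does not say how fixation points are derived from the raw measurement points. One common approach is a dispersion-threshold method, sketched below under that assumption. The function name, the sample layout `(t, x, y)` and the threshold values are hypothetical and would need tuning to the eye-tracker's sampling rate and the display geometry.

```python
from dataclasses import dataclass


@dataclass
class Fixation:
    x: float      # centroid x (pixels)
    y: float      # centroid y (pixels)
    start: float  # time of the first sample in the fixation
    end: float    # time of the last sample in the fixation

    @property
    def duration(self) -> float:
        return self.end - self.start


def _dispersion(window):
    """Horizontal plus vertical extent of a group of (t, x, y) samples."""
    xs = [p[1] for p in window]
    ys = [p[2] for p in window]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples, max_dispersion=30.0, min_duration=100):
    """Group time-sorted (t, x, y) samples into fixations.

    A fixation is a run of samples spanning at least `min_duration`
    (same unit as t, here assumed to be milliseconds) whose dispersion
    stays within `max_dispersion` pixels. Both defaults are assumptions.
    """
    fixations = []
    i, n = 0, len(samples)
    while i < n:
        # Find the first index j whose sample is min_duration after sample i.
        j = i
        while j < n and samples[j][0] - samples[i][0] < min_duration:
            j += 1
        if j >= n:
            break  # not enough remaining data for another fixation
        if _dispersion(samples[i:j + 1]) <= max_dispersion:
            # Extend the window while the samples stay close together.
            while j + 1 < n and _dispersion(samples[i:j + 2]) <= max_dispersion:
                j += 1
            window = samples[i:j + 1]
            cx = sum(p[1] for p in window) / len(window)
            cy = sum(p[2] for p in window) / len(window)
            fixations.append(Fixation(cx, cy, window[0][0], window[-1][0]))
            i = j + 1
        else:
            i += 1  # drop the first sample and try again
    return fixations
```

In this sketch the raw mode would plot every sample in `samples`, while the fixation mode would plot one marker per returned `Fixation`, optionally scaled by its duration.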

The software can also define regions of interest on the video (Figure 4) and make calculations relating to each region, e.g. the number and duration of fixations.

Figure 4. Regions of interest.
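The per-region calculations can be illustrated with a short sketch that counts fixations and sums their durations for each region. It assumes rectangular regions given as `(left, top, right, bottom)` in pixels and fixations given as `(x, y, duration)`. The function name, the region names and the coordinates are hypothetical, and the actual software may support other region shapes.

```python
def roi_metrics(fixations, regions):
    """Count fixations and sum their durations for each region of interest.

    fixations: iterable of (x, y, duration) tuples.
    regions:   dict mapping a region name to (left, top, right, bottom).
    A fixation lying in overlapping regions is counted in each of them.
    """
    stats = {name: {"count": 0, "total_duration": 0.0} for name in regions}
    for x, y, duration in fixations:
        for name, (left, top, right, bottom) in regions.items():
            if left <= x <= right and top <= y <= bottom:
                stats[name]["count"] += 1
                stats[name]["total_duration"] += duration
    return stats


# Example with made-up regions and fixations (durations in seconds).
regions = {"eyes": (100, 80, 300, 140), "mouth": (150, 200, 250, 260)}
fixations = [(200, 100, 0.25), (210, 110, 0.5), (200, 230, 0.25), (10, 10, 0.3)]
stats = roi_metrics(fixations, regions)
```

Fixations that fall outside every region, such as the last one in the example, are simply not counted.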

References

Paper presented at Measuring Behavior 2002, 4th International Conference on Methods and Techniques in Behavioral Research, 27-30 August 2002, Amsterdam, The Netherlands