Benchmarking precision in the real world

R.R. Plant, P. Quinlan, N. Hammond and T. Whitehouse

Department of Psychology, University of York, York, United Kingdom

There is little doubt that the majority of today’s high-speed, high-specification hardware and operating systems are capable of real-time data collection [1,4]. The caveat is the word 'capable'. As many researchers have noted, the dominance of multitasking operating systems makes the picture murky at best [2,3].

To date, research has concentrated on the raw performance of platforms and operating systems. Many studies have assessed suitability for high-precision timing using programs that repeat a simple operation within a tight timing loop and measure how consistently it executes (e.g. [4]). A commonly manipulated variable is the load placed on the operating system by a background task. Such research, whilst providing a solid baseline, leaves researchers in the field with a fundamental question: 'How does my own paradigm perform in the real world?'
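To illustrate the kind of tight timing loop benchmark referred to above, the following is a minimal sketch in Python. It is not the code used in the studies cited; the function names `timing_loop_intervals` and `summarise` are hypothetical, and the use of `time.perf_counter` as the clock is an assumption. The loop repeatedly reads a high-resolution clock and records the gap between successive passes. On an unloaded system the gaps should be small and consistent; a background task would be expected to produce occasional large gaps.

```python
import statistics
import time


def timing_loop_intervals(n_iterations=10000):
    """Return the time, in milliseconds, between successive loop passes.

    Hypothetical helper for illustration only: the 'simple operation'
    repeated here is just reading the clock.
    """
    intervals = []
    previous = time.perf_counter()
    for _ in range(n_iterations):
        now = time.perf_counter()
        intervals.append((now - previous) * 1000.0)  # seconds -> ms
        previous = now
    return intervals


def summarise(intervals):
    """Summarise loop intervals: mean, worst case and spread (all in ms)."""
    return {
        "mean_ms": statistics.mean(intervals),
        "max_ms": max(intervals),
        "sd_ms": statistics.pstdev(intervals),
    }


if __name__ == "__main__":
    # Run once with the machine idle and once with a background task
    # running, then compare the two summaries.
    print(summarise(timing_loop_intervals()))
```

The worst-case interval (`max_ms`) is often the more informative figure, since a single long interruption can corrupt a trial even when the mean looks acceptable. A benchmark of this kind still says nothing about a complete paradigm, which is the gap the rig described here is intended to fill.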

Until now, this question has remained extremely difficult to answer. Complete real-world paradigms are often complex, combining visual and auditory stimuli and requiring elaborate patterns of responses from subjects. The more a paradigm demands of the machine, the greater the likelihood that timing resolution will suffer. The hardware running the experiment is also acknowledged to be a major factor. What is needed, then, is a rig that can test the majority of paradigms in situ and without modification.

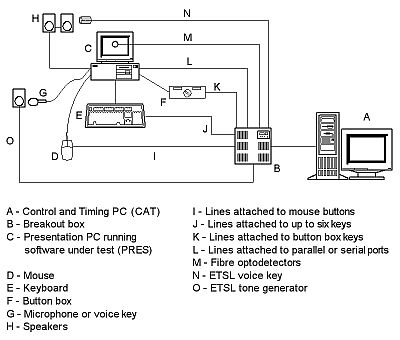

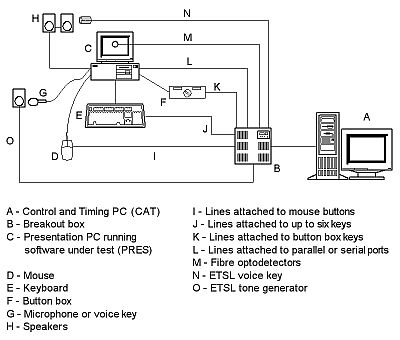

To this end, we have developed a generic rig which can be used to control and time a second machine that is being used for presentation – that is, a machine running the experimental paradigm under test (Figure 1). The results of testing can be used to inform a researcher how well their hardware, experimental software and paradigm have performed as a cohesive unit.

Figure 1. An overview of the ETSL benchmarking rig.

In this paper, we report on the test rig we have developed and how it was used to test a working psycho-acoustical paradigm in situ. Within this real-world paradigm, we assessed (with sub-millisecond precision): visual presentation onset and duration accuracy; synchrony between audio tones and visual stimuli; tone duration; and the accuracy of response registration. This enabled us to compare actual events with those programmed into, and recorded by, the experiment generator used (in this case, PsyScope running on a Mac Performa 630).
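The comparison between programmed and actual events can be sketched as follows. This is an illustrative Python fragment, not the ETSL analysis software; the function names `timing_errors` and `summarise_errors` are hypothetical, and the values in the usage example are invented solely to show the arithmetic. It assumes that each programmed event time (in milliseconds) has been paired with the time at which the rig measured the same event.

```python
import statistics


def timing_errors(programmed_ms, measured_ms):
    """Return measured minus programmed time for each paired event (ms).

    A positive error means the event occurred later than programmed.
    """
    if len(programmed_ms) != len(measured_ms):
        raise ValueError("each programmed event needs one measured event")
    return [m - p for p, m in zip(programmed_ms, measured_ms)]


def summarise_errors(errors):
    """Summarise timing errors: mean error and largest absolute error (ms)."""
    return {
        "mean_error_ms": statistics.mean(errors),
        "max_abs_error_ms": max(abs(e) for e in errors),
    }


if __name__ == "__main__":
    # Invented values for illustration only; not results from this study.
    programmed = [0.0, 500.0, 1000.0]  # onsets requested in the script
    measured = [2.0, 503.0, 1001.0]    # onsets as timed by an external rig
    print(summarise_errors(timing_errors(programmed, measured)))
```

The same calculation applies to durations and to response times: in each case the quantity of interest is the difference between what the experiment generator was asked to do, or reports having done, and what the external rig observed.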

This study was conducted under a funded project, the Experimental Timing Standards Laboratory (ETSL). See http://www.psychology.ltsn.ac.uk/ETSL/

References

Paper presented at Measuring Behavior 2002, 4th International Conference on Methods and Techniques in Behavioral Research, 27-30 August 2002, Amsterdam, The Netherlands