Facial Expressions Analysis of Human and Animal Behavior

Organisers: Moi Hoon Yap (Manchester Metropolitan University, UK, Chair), Adrian Keith Davison (University of Manchester, UK) and Daniel Leightley (King’s College London, UK)

Short name (in EasyChair): Facial Expressions (S9)

Description

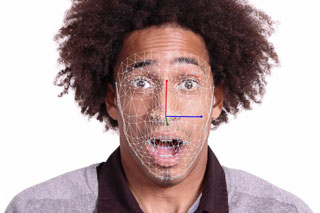

Face and gesture reveal a person's health, age, emotions and intentions, and are vital biometric characteristics. Extensive research on them is being carried out in psychology, physiology and computer vision. This symposium focuses on datasets and data sharing, the technological challenges of analysing and measuring human and animal facial expressions, and the implications of these behaviors for real-world applications. We aim to promote interaction between researchers, scholars, practitioners, engineers and students from across industry and academia who work on facial expression analysis. Cross-disciplinary work is highly encouraged. We welcome original work that addresses a wide range of issues including, but not limited to:

- Analysis of facial expressions for real-world applications

- Subtle/micro face and gesture movements analysis

- Technology in automated behavior measurement

- Machine learning and deep learning in face and gesture analysis

- Real-time face and motion analysis

- Face and motion recognition on mobile devices

- Pain assessment in veterinary sciences

- The ongoing challenges of subtle motion analysis

- Behavior technology in-the-wild

10:00-10:20 Tiffany Drape and Stacy Vincent

Measuring Response to Racial Bias Among Preservice Teachers During a Class Intervention

10:20-10:40 Daniel Leightley

Modelling attributes of common mental health disorders in adolescents using audio-visual data

10:40-11:00 Gemma Stringer, Laura Brown and Moi Hoon Yap

Taking Research to the Community: An Initial Protocol for the Experimental Measurement of Emotional Expression in People with Dementia

11:00-11:30 Coffee

11:30-11:50 Cristina Palmero, Elsbeth van Dam, Sergio Escalera, Mike Kelia, Guido Lichtert, Lucas Noldus, Andrew Spink and Astrid van Wieringen

Automatic mutual gaze detection in face-to-face dyadic interaction videos

11:50-12:10 Maheen Rashid, Sofia Broomé, Pia Haubro Andersen, Karina Gleerup and Yong Jae Lee

What should I annotate? An automatic tool for finding video segments for EquiFACS annotation

12:10-12:30 Andreas Maroulis

Baby FaceReader AU classification for Infant Facial Expression Configurations

12:30-14:00 Lunch

14:00-14:20 Pia Haubro Andersen, Karina Bech Gleerup, Jennifer Wathan, Britt Coles, Hedvig Kjellström, Sofia Broomé, Yong Jae Lee, Maheen Rashid, Erika Rosenberg, Claudia Sonder and Deborah Forster

Can a Machine Learn to See Horse Pain? An Interdisciplinary Approach Towards Automated Decoding of Facial Expressions of Pain in the Horse

14:20-14:40 Luís M. Cunha, Célia Rocha and Rui Costa Lima

A new approach to analyse facial behaviour elicited by food products: Temporal Dominance of Facial Emotions (TDFE)

14:40-15:00 Karen Lander, Judith Bek and Ellen Poliakoff

Measuring recognition of emotional facial expressions by people with Parkinson’s disease using an eye-tracking methodology

15:00-15:20 Discussion
