FaceReader: new developments in facial expression analysis

Organizers: Tim den Uyl (VicarVision) & Hans Theuws (Noldus Information Technology)

Description: Interest in methods that enable automatic analysis of emotions continues to grow. This trend is evident in many application areas, such as psychology, consumer behavior, market research, human factors and usability. Detecting emotions by analyzing facial expressions offers a non-invasive solution. FaceReader™ is one of the software tools available for facial expression analysis.

FaceReader automatically analyzes the basic facial expressions (neutral, happy, sad, angry, disgusted, surprised, scared and contemptuous) from recorded video, from a live camera feed, or from still images. When one or more participant videos or image files are analyzed, the values can be visualized as bar graphs, as a pie chart, and as a continuous signal. Another graph displays how negative or positive the emotion is (valence). A separate reporting window shows a pie chart with percentages, a smiley, and a traffic light indicating whether the person's mood is positive, neutral, or negative. All visualizations are displayed in real time and can also be reviewed afterwards.
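The valence signal and the traffic-light mood indicator can be illustrated with a small sketch. It assumes valence is computed per frame as the intensity of "happy" minus the intensity of the strongest negative expression. The set of negative expressions, the function names `valence` and `mood`, and the 0.2 threshold for the traffic-light labels are hypothetical choices for illustration, not FaceReader's actual API or parameters.

```python
from typing import Mapping

# Assumption: these expressions count as "negative" for valence.
# Whether "contempt" should also be included is left open here.
NEGATIVE_EXPRESSIONS = ("sad", "angry", "scared", "disgusted")


def valence(intensities: Mapping[str, float]) -> float:
    """Valence in [-1, 1]: 'happy' minus the strongest negative expression.

    `intensities` maps expression names to intensities in [0, 1] for one frame;
    missing expressions are treated as 0.
    """
    happy = intensities.get("happy", 0.0)
    strongest_negative = max(intensities.get(name, 0.0) for name in NEGATIVE_EXPRESSIONS)
    return happy - strongest_negative


def mood(valence_value: float, threshold: float = 0.2) -> str:
    """Traffic-light style label; the default threshold is a hypothetical choice."""
    if valence_value > threshold:
        return "positive"
    if valence_value < -threshold:
        return "negative"
    return "neutral"


frame = {"neutral": 0.1, "happy": 0.7, "sad": 0.05, "angry": 0.1, "scared": 0.0, "disgusted": 0.02}
v = valence(frame)
print(round(v, 2), mood(v))  # prints: 0.6 positive
```

Taking the maximum over the negative expressions, rather than their sum, keeps valence within [-1, 1] when each intensity lies in [0, 1].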

The first version of FaceReader capable of real-time facial expression analysis dates from 2005. Since then, development to extend its functionality and improve its performance has continued. In this demonstration we will present several new developments, including:

- Physiological measures can provide significant additional insight, but traditional measurement methods are intrusive, relatively expensive and difficult to apply remotely. We have therefore developed a remote heart rate measure based on remote photoplethysmography (PPG) and integrated it into FaceReader. This technique measures the small color changes caused by the pulse of arterial blood passing through the capillaries just beneath the epidermis and uses them to determine the subject's heart rate.

Heart rate can be a useful additional indicator of arousal, especially for subjects or situations with little variation in facial expression. An increase in heart rate does not reveal whether it is caused by a positive (e.g. surprise) or a negative (e.g. fear) emotion, but it does help to assess the emotion's intensity. Furthermore, derived values such as heart rate variability give insight into stress and workload.
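The principle behind remote PPG and a derived variability measure can be sketched in a few lines. This is a simplified textbook approach, not FaceReader's implementation, which is not described here. It assumes the per-frame mean green value of a facial skin region has already been extracted (face detection and region selection are not shown), removes slow drift with a linear fit, and takes the strongest spectral peak in an assumed plausible heart-rate band of 42 to 240 bpm. For heart rate variability it uses RMSSD as one common time-domain measure; the text does not say which measures FaceReader reports, and obtaining inter-beat intervals would require beat detection that is also not shown. The function names are hypothetical.

```python
import numpy as np


def estimate_heart_rate_bpm(green_means, fps: float,
                            min_bpm: float = 42.0, max_bpm: float = 240.0) -> float:
    """Estimate heart rate from per-frame mean green values of a skin region.

    Simplified remote PPG: detrend, window, and pick the strongest frequency
    within an assumed heart-rate band of the power spectrum.
    """
    signal = np.asarray(green_means, dtype=float)
    t = np.arange(signal.size)
    # Remove slow drift (e.g. gradual lighting changes) with a least-squares line.
    slope, intercept = np.polyfit(t, signal, 1)
    detrended = signal - (slope * t + intercept)
    # A Hann window reduces spectral leakage before the FFT.
    windowed = detrended * np.hanning(signal.size)
    power = np.abs(np.fft.rfft(windowed)) ** 2
    freqs_hz = np.fft.rfftfreq(signal.size, d=1.0 / fps)
    # Only consider frequencies in the assumed heart-rate band.
    band = (freqs_hz >= min_bpm / 60.0) & (freqs_hz <= max_bpm / 60.0)
    peak_hz = freqs_hz[band][np.argmax(power[band])]
    return float(peak_hz * 60.0)


def rmssd_ms(inter_beat_intervals_ms) -> float:
    """RMSSD: root mean square of successive differences between beat intervals."""
    diffs = np.diff(np.asarray(inter_beat_intervals_ms, dtype=float))
    return float(np.sqrt(np.mean(diffs ** 2)))


# Synthetic example: 30 s at 30 fps, a 1.2 Hz (72 bpm) pulse plus linear drift and noise.
fps = 30.0
n = 900
rng = np.random.default_rng(0)
pulse = 0.5 * np.sin(2 * np.pi * 1.2 * np.arange(n) / fps)
green = 120.0 + pulse + 0.01 * np.arange(n) + 0.05 * rng.standard_normal(n)
print(round(estimate_heart_rate_bpm(green, fps), 1))  # prints: 72.0
print(round(rmssd_ms([800, 810, 790, 805]), 2))
```

The frequency resolution is `fps / n` Hz, so a 30 s window at 30 fps resolves heart rate in steps of 2 bpm; longer windows give finer resolution but respond more slowly to changes in arousal.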

- Analysis has been improved by implementing a deep learning model that works alongside the existing face modeling. Because this model does not need to build a complete face mesh, it handles occlusions and challenging lighting conditions better.

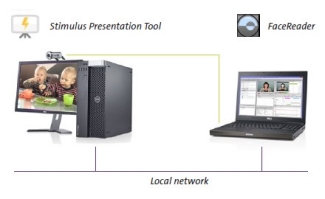

- In many applications, one or more stimulus movies are shown to test participants while their expressions are analyzed. Previously, the test leader had to trigger a stimulus marker and start the stimulus movie manually. The Stimulus Presentation Tool automatically synchronizes the display of the stimulus movie to the participant with the corresponding stimulus trigger in the FaceReader project. The stimulus can be presented on the computer running FaceReader or on a separate, connected computer.

- FaceReader is now compatible with N-Linx, the new standard protocol for integrating systems for behavioral research. This makes it easier to connect, for example, FaceReader and The Observer XT for real-time control, synchronization and data exchange, together with other systems such as eye trackers or data acquisition (DAQ) systems.